From vSphere 7 onwards, VMware provides vSphere with Kubernetes (formerly Project Pacific), which runs both VMs and containers natively on vSphere. The Tanzu Kubernetes Grid Service (TKGS) lets you run fully conformant Kubernetes clusters on vSphere. A vSphere Pod runs natively on vSphere, whereas a Tanzu Kubernetes Cluster (TKC) is a cluster managed by TKGS, whose nodes are virtual machine objects deployed inside a vSphere Namespace.

You can initiate a rolling update of a Tanzu Kubernetes Cluster (TKC) by changing its Tanzu Kubernetes release (TKR), which determines the Kubernetes version, its virtual machine class, or its storage class. In this article, I will update the Kubernetes version of Kubernetes clusters running on vSphere.

Let's first inspect the environment: there are three separate Kubernetes clusters inside the vSphere Namespace validation-test-1. The screenshot below is taken from the vSphere Client UI.

Next, let's check the versions and update path of the Tanzu Kubernetes Clusters. To view them, we need to log in to the SupervisorControlVM. If you are not sure how to log in to the SupervisorControlVM, this article will help you.

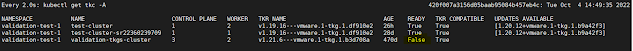

[ ~ ]# kubectl get tanzukubernetesclusters -A

or

[ ~ ]# kubectl get tkc -A

Let's now check the list of available Tanzu Kubernetes Releases:

[ ~ ]# kubectl get tanzukubernetesreleases

or

[ ~ ]# kubectl get tkr
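The release names listed here encode the full version string: Kubernetes object names cannot contain `+`, so the `+` separating the upstream Kubernetes version from the VMware build suffix is written as `---` (for example, `v1.21.6---vmware.1-tkg.1.b3d708a` stands for `v1.21.6+vmware.1-tkg.1.b3d708a`). The POSIX shell sketch below uses hypothetical helper names (`tkr_full_version`, `tkr_k8s_version`, `tkr_upgrade_allowed`) to decode these names and sanity-check an update path. It assumes the usual Kubernetes rule that a cluster moves up at most one minor version per update; confirm the supported paths in the release notes for your vSphere version.

```shell
# Hypothetical helper: turn a TKR object name back into the version it encodes,
# e.g. v1.21.6---vmware.1-tkg.1.b3d708a -> v1.21.6+vmware.1-tkg.1.b3d708a
tkr_full_version() {
    printf '%s\n' "$1" | sed 's/---/+/'
}

# Hypothetical helper: keep only the upstream Kubernetes version without the
# leading "v", e.g. v1.21.6---vmware.1-tkg.1.b3d708a -> 1.21.6
tkr_k8s_version() {
    printf '%s\n' "$1" | sed -e 's/---.*//' -e 's/^v//'
}

# Hypothetical check (assumed rule: no skipping of minor versions).
# Succeeds if moving from TKR $1 to TKR $2 keeps the same major version and
# advances by zero or one minor version; patch and build are not compared.
tkr_upgrade_allowed() {
    cur=$(tkr_k8s_version "$1")
    tgt=$(tkr_k8s_version "$2")
    cur_major=$(printf '%s\n' "$cur" | cut -d. -f1)
    cur_minor=$(printf '%s\n' "$cur" | cut -d. -f2)
    tgt_major=$(printf '%s\n' "$tgt" | cut -d. -f1)
    tgt_minor=$(printf '%s\n' "$tgt" | cut -d. -f2)
    [ "$cur_major" -eq "$tgt_major" ] &&
        [ "$tgt_minor" -ge "$cur_minor" ] &&
        [ "$tgt_minor" -le $((cur_minor + 1)) ]
}
```

For example, after sourcing these functions on the SupervisorControlVM, `[ ~ ]# tkr_upgrade_allowed <current-tkr-name> v1.21.6---vmware.1-tkg.1.b3d708a && echo "update path OK"` compares a cluster's current TKR (from its `tkr >> reference >> name` field) with the target release. The check is deliberately coarse; it only guards against accidentally skipping a minor version.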

To make new Kubernetes versions available, we first need to download the releases from here. Then, in the vSphere Client UI, create a Content Library and upload the release files downloaded from that link. Once the releases are uploaded, they are listed under the Content Library section.

To start the update, edit the Kubernetes cluster manifest and set the release version as shown below:

[ ~ ]# kubectl edit tanzukubernetesclusters validation-tkgs-cluster -n validation-test-1

With the manifest open in edit mode, first check the API version, because it defines how the release names are specified. Check the API reference here. Then, in the spec >> distribution section, update the Kubernetes release version. Lastly, update the release version in each topology >> tkr >> reference section. The latest API version supports different Kubernetes release versions for the control plane nodes and the worker nodes; for uniformity, I am using the same version in all three places.

```yaml
# Excerpt of the TKC manifest (metadata and status omitted)
apiVersion: run.tanzu.vmware.com/v1alpha2
kind: TanzuKubernetesCluster
spec:
  distribution:
    fullVersion: v1.21.6---vmware.1-tkg.1.b3d708a   # updated release
    version: ""
  topology:
    controlPlane:
      replicas: 3
      storageClass: tanzu-policy
      tkr:
        reference:
          name: v1.21.6---vmware.1-tkg.1.b3d708a    # updated release (control plane)
      vmClass: best-effort-large
    nodePools:
    - name: workers
      replicas: 2
      storageClass: tanzu-policy
      tkr:
        reference:
          name: v1.21.6---vmware.1-tkg.1.b3d708a    # updated release (worker node pool)
      vmClass: best-effort-large
```
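If you prefer a non-interactive alternative to `kubectl edit`, the same three fields can in principle be changed with `kubectl patch --type json`. The sketch below uses a hypothetical helper, `tkc_upgrade_patch`, that only builds the JSON Patch text; it assumes the v1alpha2 field paths shown in the manifest above and that node pools are addressed by their index in `spec.topology.nodePools`.

```shell
# Hypothetical helper (not part of any VMware tooling): print a JSON Patch
# that sets the same TKR name in the three places edited in the manifest:
# spec.distribution.fullVersion, the control plane, and each node pool.
tkc_upgrade_patch() {
    tkr="$1"     # target TKR object name, as listed by `kubectl get tkr`
    pools="$2"   # number of entries under spec.topology.nodePools
    patch="[{\"op\":\"replace\",\"path\":\"/spec/distribution/fullVersion\",\"value\":\"$tkr\"}"
    patch="$patch,{\"op\":\"replace\",\"path\":\"/spec/topology/controlPlane/tkr/reference/name\",\"value\":\"$tkr\"}"
    i=0
    while [ "$i" -lt "$pools" ]; do
        # JSON Patch paths address array elements by index
        patch="$patch,{\"op\":\"replace\",\"path\":\"/spec/topology/nodePools/$i/tkr/reference/name\",\"value\":\"$tkr\"}"
        i=$((i + 1))
    done
    printf '%s]\n' "$patch"
}
```

Usage for the single `workers` node pool in validation-tkgs-cluster:

[ ~ ]# kubectl patch tkc validation-tkgs-cluster -n validation-test-1 --type json -p "$(tkc_upgrade_patch v1.21.6---vmware.1-tkg.1.b3d708a 1)"

A JSON Patch `replace` fails if the target path does not exist, so drop the `spec.distribution.fullVersion` operation if your manifest does not carry that field.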

After saving the manifest, monitoring the cluster status shows that the cluster is no longer in the Ready state while it performs a rolling update of all control plane and worker nodes.
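For interactive monitoring, `kubectl get tkc -n validation-test-1 -w` keeps printing status changes. For a script that should block until the rollout finishes, here is a minimal polling sketch. `wait_until` and `tkc_ready` are hypothetical helper names, and `tkc_ready` assumes the TKC exposes a `Ready` entry in `status.conditions`. Right after the edit the condition may still read `True` for a moment, so start polling once the cluster has left the Ready state.

```shell
# Hypothetical helper: re-run a command every INTERVAL seconds until it
# succeeds (returns 0) or LIMIT seconds have elapsed (returns 1).
wait_until() {
    limit="$1"      # give up after this many seconds
    interval="$2"   # seconds to sleep between attempts
    shift 2         # remaining arguments are the command to run
    elapsed=0
    while ! "$@"; do
        if [ "$elapsed" -ge "$limit" ]; then
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    return 0
}

# Hypothetical check (assumes a "Ready" condition in the TKC status):
# succeeds when cluster $1 in namespace $2 reports Ready=True.
tkc_ready() {
    ready=$(kubectl get tkc "$1" -n "$2" \
        -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')
    [ "$ready" = "True" ]
}
```

For example, `[ ~ ]# wait_until 3600 30 tkc_ready validation-tkgs-cluster validation-test-1 && echo "cluster is Ready"` polls every 30 seconds for up to an hour. If `kubectl` itself fails, `tkc_ready` simply returns non-zero and polling continues.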

The series of actions can also be reviewed in the vSphere Client UI.

Cluster release version before the update

Cluster release version after the update

After the update completed in all three clusters

Here is the official documentation link for reference.

Cheers 😎
